
Avro

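What is Avro

Avro is a data serialization framework developed by the Apache Software Foundation.

Its schemas are written in JSON, but unlike plain JSON data, Avro data always comes with a schema, and the values themselves are stored in a compact binary format.

Avro = schema (JSON) + values (binary-encoded)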

Pros and Cons

| Pros | Cons |
|---|---|

DataType

Primitive

- null

- boolean

- int

- long

- float

- double

- bytes

- string
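To make "binary-encoded values" concrete, here is a minimal sketch of how the Avro specification encodes each primitive type: `null` writes nothing, `boolean` is one byte, `int`/`long` are zig-zag variable-length integers, `float`/`double` are little-endian IEEE 754, and `bytes`/`string` are a length followed by the raw (UTF-8) bytes. The class `AvroPrimitiveEncoder` is hypothetical and hand-written for illustration; it is not the `org.apache.avro` encoder API.

```java
import java.io.ByteArrayOutputStream;
import java.nio.ByteBuffer;
import java.nio.ByteOrder;
import java.nio.charset.StandardCharsets;

// Hypothetical, hand-written sketch of Avro's binary encoding for primitive types.
// Illustration only: this is not the org.apache.avro.io.BinaryEncoder API.
final class AvroPrimitiveEncoder {
    private final ByteArrayOutputStream out = new ByteArrayOutputStream();

    // null: nothing is written.
    void writeNull() {
    }

    // boolean: one byte, 0 (false) or 1 (true).
    void writeBoolean(boolean b) {
        out.write(b ? 1 : 0);
    }

    // int: same zig-zag varint scheme as long.
    void writeInt(int n) {
        writeLong(n);
    }

    // long: zig-zag maps signed to unsigned (0->0, -1->1, 1->2, ...),
    // then 7 bits per byte, low bits first, high bit set on every byte except the last.
    void writeLong(long n) {
        long z = (n << 1) ^ (n >> 63);
        while ((z & ~0x7FL) != 0) {
            out.write((int) ((z & 0x7F) | 0x80));
            z >>>= 7;
        }
        out.write((int) z);
    }

    // float: 4 bytes, IEEE 754, little-endian.
    void writeFloat(float f) {
        writeRaw(ByteBuffer.allocate(4).order(ByteOrder.LITTLE_ENDIAN).putFloat(f).array());
    }

    // double: 8 bytes, IEEE 754, little-endian.
    void writeDouble(double d) {
        writeRaw(ByteBuffer.allocate(8).order(ByteOrder.LITTLE_ENDIAN).putDouble(d).array());
    }

    // bytes: length written as a long, then the raw bytes.
    void writeBytes(byte[] b) {
        writeLong(b.length);
        writeRaw(b);
    }

    // string: length written as a long, then the UTF-8 bytes.
    void writeString(String s) {
        writeBytes(s.getBytes(StandardCharsets.UTF_8));
    }

    byte[] toByteArray() {
        return out.toByteArray();
    }

    private void writeRaw(byte[] b) {
        out.write(b, 0, b.length);
    }

    public static void main(String[] args) {
        AvroPrimitiveEncoder enc = new AvroPrimitiveEncoder();
        enc.writeString("Alyssa");
        enc.writeLong(256);
        StringBuilder hex = new StringBuilder();
        for (byte b : enc.toByteArray()) {
            hex.append(String.format("%02x ", b));
        }
        System.out.println(hex.toString().trim()); // prints: 0c 41 6c 79 73 73 61 80 04
    }
}
```

These are the same bytes that appear as `\f A l y s s a` and `200 004` (octal) in the `users.avro` dump under Serialized Data.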

Complex

- record

- name (M): name of record, String

- namespace (O): 패키지, String

- doc (O): record documentation, String

- aliases (O): alias 지정, String

- fields (M): record의 속성들, Array

- name (M): field name, String

- doc (O): field documentation, String

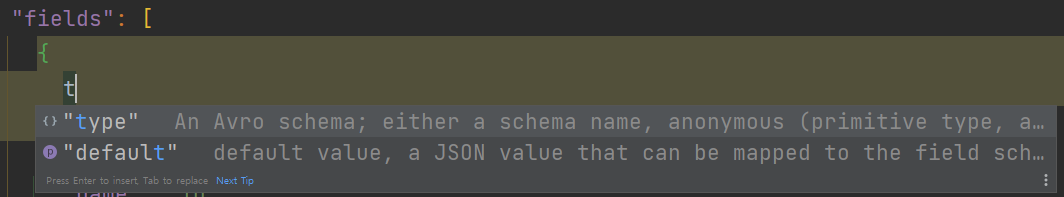

- type (M): dataType of field

- default (O, default): field의 default 값 지정

- order (O): ascending, descending, ignore

- aliases: field alias 지정, String
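In the binary encoding a record carries no field names or tags: its field values are simply written one after another in the order the schema declares them. A union such as `["int", "null"]` is written as the zero-based index of the chosen branch, followed by that branch's value. The sketch below hand-encodes one record of the `User` schema that the Serialized Data example writes (`name: string`, `favorite_number: ["int","null"]`, `favorite_color: ["string","null"]`); `UserRecordEncoder` and its methods are hypothetical names for illustration.

```java
import java.io.ByteArrayOutputStream;
import java.nio.charset.StandardCharsets;

// Hypothetical helper: hand-encodes one example.avro.User record
// { name: string, favorite_number: ["int","null"], favorite_color: ["string","null"] }.
final class UserRecordEncoder {

    // Zig-zag varint, used for Avro int/long values, lengths and union indexes.
    private static void writeLong(ByteArrayOutputStream out, long n) {
        long z = (n << 1) ^ (n >> 63);
        while ((z & ~0x7FL) != 0) {
            out.write((int) ((z & 0x7F) | 0x80));
            z >>>= 7;
        }
        out.write((int) z);
    }

    // string: length as a long, then the UTF-8 bytes.
    private static void writeString(ByteArrayOutputStream out, String s) {
        byte[] utf8 = s.getBytes(StandardCharsets.UTF_8);
        writeLong(out, utf8.length);
        out.write(utf8, 0, utf8.length);
    }

    // A null argument selects the "null" branch of the corresponding union.
    static byte[] encode(String name, Integer favoriteNumber, String favoriteColor) {
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        writeString(out, name);                 // field 1: string
        if (favoriteNumber != null) {           // field 2: ["int", "null"]
            writeLong(out, 0);                  // branch 0 = int
            writeLong(out, favoriteNumber);
        } else {
            writeLong(out, 1);                  // branch 1 = null, no value bytes follow
        }
        if (favoriteColor != null) {            // field 3: ["string", "null"]
            writeLong(out, 0);                  // branch 0 = string
            writeString(out, favoriteColor);
        } else {
            writeLong(out, 1);                  // branch 1 = null
        }
        return out.toByteArray();
    }
}
```

For example, `encode("Alyssa", 256, null)` yields the 11 bytes `0c 41 6c 79 73 73 61 00 80 04 02`, which correspond to `\f A l y s s a \0 200 004 002` in the `users.avro` dump.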

Enums

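- name (M): name of the enum, String
- namespace (O): package-like qualifier for the name, String
- doc (O): documentation for the enum, String
- aliases (O): alternate names for the enum, Array<String>
- symbols (M): the enum's symbols; each must be unique within the enum, Array<String>
- default (O): default symbol, used when the reader encounters a symbol its schema does not define; must be one of the symbols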
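An enum value is encoded as an `int`: the zero-based position of the symbol in `symbols`. When schemas differ, the reader maps the written position back to the writer's symbol and looks it up by name in its own `symbols`, falling back to its `default` if the symbol is unknown. A minimal sketch, assuming the `Suit` symbols `SPADES, HEARTS, DIAMONDS, CLUBS`; `AvroEnumSketch` is a hypothetical helper, not an Avro API, and it works on symbol lists rather than real schemas.

```java
import java.io.ByteArrayOutputStream;
import java.util.Arrays;
import java.util.List;

// Hypothetical sketch of enum encoding and reader-side resolution (not an Avro API).
final class AvroEnumSketch {

    // Encoding: the symbol's zero-based position in "symbols", written as an Avro int.
    static byte[] encode(List<String> symbols, String symbol) {
        int index = symbols.indexOf(symbol);
        if (index < 0) {
            throw new IllegalArgumentException("Not a symbol of this enum: " + symbol);
        }
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        long z = (long) index << 1;             // zig-zag of a non-negative value is 2 * value
        while ((z & ~0x7FL) != 0) {             // varint: 7 bits per byte, low bits first
            out.write((int) ((z & 0x7F) | 0x80));
            z >>>= 7;
        }
        out.write((int) z);
        return out.toByteArray();
    }

    // Resolution: map the written index back to the writer's symbol, then look it up by name
    // in the reader's symbols; an unknown symbol falls back to the reader's default.
    static String resolve(List<String> writerSymbols, int writtenIndex,
                          List<String> readerSymbols, String readerDefault) {
        String symbol = writerSymbols.get(writtenIndex);
        if (readerSymbols.contains(symbol)) {
            return symbol;
        }
        if (readerDefault != null) {            // null here means "no default declared"
            return readerDefault;
        }
        throw new IllegalStateException("Unknown symbol and no default: " + symbol);
    }

    public static void main(String[] args) {
        List<String> suits = Arrays.asList("SPADES", "HEARTS", "DIAMONDS", "CLUBS");
        System.out.println(encode(suits, "DIAMONDS")[0]); // prints 4 (zig-zag of index 2)
    }
}
```

Because only the position is stored, reordering `symbols` in a schema changes the meaning of previously written data, while resolution itself is done by symbol name.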

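Array

- items (M): schema of the array's elements (a type name or an inline schema)
- default (O): default value, given as a JSON array (e.g. [])

Map

- values (M): schema of the map's values (a type name or an inline schema); keys are always strings
- default (O): default value, given as a JSON object (e.g. {})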
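Arrays and maps are written as a series of blocks: each block starts with an item count (a `long`) followed by that many items, and a block with count 0 marks the end. Map entries are a string key followed by the value. A minimal sketch that writes everything as one block; `AvroBlockEncoder` is a hypothetical helper, and it omits the variant the specification also allows, where a negative count is followed by the block's size in bytes.

```java
import java.io.ByteArrayOutputStream;
import java.nio.charset.StandardCharsets;
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical sketch of Avro's block encoding for array<long> and map<long> (not an Avro API).
final class AvroBlockEncoder {

    // Zig-zag varint used for counts, lengths and long values.
    private static void writeLong(ByteArrayOutputStream out, long n) {
        long z = (n << 1) ^ (n >> 63);
        while ((z & ~0x7FL) != 0) {
            out.write((int) ((z & 0x7F) | 0x80));
            z >>>= 7;
        }
        out.write((int) z);
    }

    private static void writeString(ByteArrayOutputStream out, String s) {
        byte[] utf8 = s.getBytes(StandardCharsets.UTF_8);
        writeLong(out, utf8.length);
        out.write(utf8, 0, utf8.length);
    }

    // array<long>: one block (count + items), then the terminating 0 count.
    static byte[] encodeLongArray(long[] items) {
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        if (items.length > 0) {
            writeLong(out, items.length);
            for (long item : items) {
                writeLong(out, item);
            }
        }
        writeLong(out, 0);
        return out.toByteArray();
    }

    // map<long>: one block of (string key, long value) entries, then the terminating 0 count.
    static byte[] encodeLongMap(Map<String, Long> map) {
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        if (!map.isEmpty()) {
            writeLong(out, map.size());
            for (Map.Entry<String, Long> entry : map.entrySet()) {
                writeString(out, entry.getKey());
                writeLong(out, entry.getValue());
            }
        }
        writeLong(out, 0);
        return out.toByteArray();
    }

    private static String hex(byte[] bytes) {
        StringBuilder sb = new StringBuilder();
        for (byte b : bytes) {
            sb.append(String.format("%02x ", b));
        }
        return sb.toString().trim();
    }

    public static void main(String[] args) {
        System.out.println(hex(encodeLongArray(new long[]{3, 27}))); // prints 04 06 36 00
        Map<String, Long> counts = new LinkedHashMap<>();
        counts.put("a", 1L);
        System.out.println(hex(encodeLongMap(counts)));              // prints 02 02 61 02 00
    }
}
```

The array output `04 06 36 00` matches the example the Avro specification gives for an array of the longs 3 and 27.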

default values
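A field's `default` matters during schema resolution, when data written with one schema is read with another: a field the reader declares but the written data lacks gets the reader's default (and is an error without one), while written fields the reader does not declare are ignored. The sketch below models this at the level of field values, with records as `Map<String, Object>`; `DefaultResolutionSketch`, `ReaderField` and the `favorite_color` default `"unknown"` are hypothetical, and real resolution additionally handles aliases and type promotion.

```java
import java.util.Arrays;
import java.util.HashMap;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical sketch of how record-field defaults are applied during schema resolution.
// Records are modelled as Map<String, Object>; this is not an Avro API.
final class DefaultResolutionSketch {

    static final class ReaderField {
        final String name;
        final boolean hasDefault;   // false = the reader schema declares no "default"
        final Object defaultValue;

        ReaderField(String name, boolean hasDefault, Object defaultValue) {
            this.name = name;
            this.hasDefault = hasDefault;
            this.defaultValue = defaultValue;
        }
    }

    static Map<String, Object> resolve(Map<String, Object> written, List<ReaderField> readerFields) {
        Map<String, Object> result = new LinkedHashMap<>();
        for (ReaderField field : readerFields) {
            if (written.containsKey(field.name)) {
                result.put(field.name, written.get(field.name));   // value from the written data
            } else if (field.hasDefault) {
                result.put(field.name, field.defaultValue);        // missing: use the reader's default
            } else {
                throw new IllegalStateException("No value and no default for field " + field.name);
            }
        }
        // Written fields that the reader schema does not declare are never copied, i.e. ignored.
        return result;
    }

    public static void main(String[] args) {
        Map<String, Object> written = new HashMap<>();
        written.put("name", "Alyssa");
        written.put("favorite_number", 256);
        List<ReaderField> reader = Arrays.asList(
                new ReaderField("name", false, null),
                new ReaderField("favorite_number", false, null),
                new ReaderField("favorite_color", true, "unknown")); // hypothetical newer field
        System.out.println(resolve(written, reader));
        // prints {name=Alyssa, favorite_number=256, favorite_color=unknown}
    }
}
```

This is why adding a field with a default is a backward-compatible schema change, whereas adding one without a default breaks reading of older data.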

Example

Record

A singly linked list of 64-bit integers: each element holds a `long` in `value`, `next` is either `null` or another `LongList`, and `LinkedLongs` is an old name kept as an alias.

```json
{
  "type": "record",
  "name": "LongList",
  "aliases": ["LinkedLongs"],
  "fields": [
    {"name": "value", "type": "long"},
    {"name": "next", "type": ["null", "LongList"]}
  ]
}
```

Enum

```json
{
  "type": "enum",
  "name": "Suit",
  "symbols": ["SPADES", "HEARTS", "DIAMONDS", "CLUBS"]
}
```

Serialized Data

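Writing two `User` records with the Python `avro` package; `user.avsc` contains the `example.avro.User` schema, which ends up embedded in the file header.

```python
import avro.schema
from avro.datafile import DataFileReader, DataFileWriter
from avro.io import DatumReader, DatumWriter

# The schema is required for writing
schema = avro.schema.parse(open("user.avsc", "rb").read())

# Avro files are binary, so the file must be opened in "wb" mode
writer = DataFileWriter(open("users.avro", "wb"), DatumWriter(), schema)
writer.append({"name": "Alyssa", "favorite_number": 256})
writer.append({"name": "Ben", "favorite_number": 7, "favorite_color": "red"})
writer.close()

# Reading needs no schema argument: it is taken from the file header
reader = DataFileReader(open("users.avro", "rb"), DatumReader())
for user in reader:
    print(user)
reader.close()
```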

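The resulting `users.avro`, byte by byte (`od -c`-style dump, octal offsets). The header starts with the magic bytes `O b j 001`, followed by a metadata map holding `avro.schema` (the writer's schema as JSON) and `avro.codec` (`null`, i.e. uncompressed), then a 16-byte sync marker. A single data block follows: the object count (`004` = 2), the block size in bytes (`,` = 22), the two records, and the sync marker again.

```
0000000 O b j 001 004 026 a v r o . s c h e m
0000020 a 272 003 { " t y p e " : " r e c
0000040 o r d " , " n a m e s p a c e
0000060 " : " e x a m p l e . a v r o
0000100 " , " n a m e " : " U s e r
0000120 " , " f i e l d s " : [ { "
0000140 t y p e " : " s t r i n g " ,
0000160 " n a m e " : " n a m e " }
0000200 , { " t y p e " : [ " i n t
0000220 " , " n u l l " ] , " n a m
0000240 e " : " f a v o r i t e _ n u
0000260 m b e r " } , { " t y p e " :
0000300 [ " s t r i n g " , " n u l
0000320 l " ] , " n a m e " : " f a
0000340 v o r i t e _ c o l o r " } ] }
0000360 024 a v r o . c o d e c \b n u l l
0000400 \0 211 266 / 030 334 ˪ ** P 314 341 267 234 310 5 213
0000420 6 004 , \f A l y s s a \0 200 004 002 006 B
0000440 e n \0 016 \0 006 r e d 211 266 / 030 334 ˪ **
0000460 P 314 341 267 234 310 5 213 6
0000471
```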
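The data block in the dump above can be decoded by hand, which shows how little the records themselves contain once the schema is known. A minimal sketch: the byte array is copied from the dump, starting right after the header's sync marker (`004 , \f A l y s s a ...`) and stopping before the trailing sync marker; `UsersBlockDecoder` is a hypothetical class written for illustration, not part of the Avro library.

```java
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;

// Hypothetical sketch: decoding the users.avro data block by hand (not an Avro API).
final class UsersBlockDecoder {

    // Bytes from the dump: count 2, size 22, then the two User records.
    static final byte[] DATA_BLOCK = {
        0x04, 0x2c,
        0x0c, 'A', 'l', 'y', 's', 's', 'a', 0x00, (byte) 0x80, 0x04, 0x02,
        0x06, 'B', 'e', 'n', 0x00, 0x0e, 0x00, 0x06, 'r', 'e', 'd'
    };

    private final byte[] buf;
    private int pos;

    UsersBlockDecoder(byte[] buf) {
        this.buf = buf;
    }

    // Varint (7 bits per byte, low bits first) followed by zig-zag decoding.
    long readLong() {
        long z = 0;
        int shift = 0;
        int b;
        do {
            b = buf[pos++] & 0xFF;
            z |= (long) (b & 0x7F) << shift;
            shift += 7;
        } while ((b & 0x80) != 0);
        return (z >>> 1) ^ -(z & 1);
    }

    // string: length as a long, then UTF-8 bytes.
    String readString() {
        int length = (int) readLong();
        String s = new String(buf, pos, length, StandardCharsets.UTF_8);
        pos += length;
        return s;
    }

    // Reads one block of example.avro.User records, rendering each as "name number color".
    List<String> readUsers() {
        long count = readLong();
        long size = readLong();             // byte length of the records that follow
        int end = pos + (int) size;
        List<String> users = new ArrayList<>();
        for (long i = 0; i < count; i++) {
            String name = readString();
            Integer number = null;
            if (readLong() == 0) {          // union ["int", "null"]: branch 0 = int
                number = (int) readLong();
            }
            String color = null;
            if (readLong() == 0) {          // union ["string", "null"]: branch 0 = string
                color = readString();
            }
            users.add(name + " " + number + " " + color);
        }
        if (pos != end) {
            throw new IllegalStateException("Block size mismatch");
        }
        return users;
    }

    public static void main(String[] args) {
        System.out.println(new UsersBlockDecoder(DATA_BLOCK).readUsers());
        // prints [Alyssa 256 null, Ben 7 red]
    }
}
```

Note that nothing in the block identifies field names or types: without the schema from the header, these 24 bytes cannot be interpreted.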

Usage

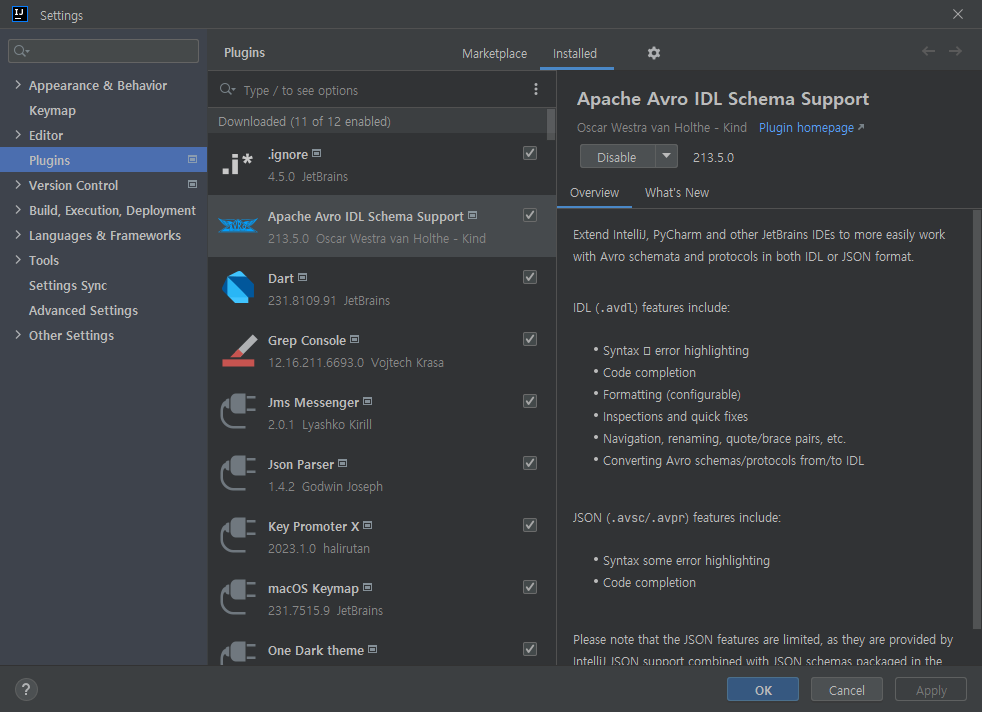

IDE Support

Import

```xml
....
<dependencies>
    <dependency>
        <groupId>org.apache.avro</groupId>
        <artifactId>avro</artifactId>
        <version>1.11.0</version>
    </dependency>
</dependencies>
....
<build>
    <plugins>
        <plugin>
            <groupId>org.apache.avro</groupId>
            <artifactId>avro-maven-plugin</artifactId>
            <version>1.11.0</version>
            <executions>
                <execution>
                    <!-- the "schema" goal generates Java classes from the .avsc files in sourceDirectory -->
                    <phase>generate-sources</phase>
                    <goals>
                        <goal>schema</goal>
                    </goals>
                    <configuration>
                        <sourceDirectory>${project.basedir}/src/main/resources/avro/</sourceDirectory>
                        <outputDirectory>${project.basedir}/src/main/java/</outputDirectory>
                    </configuration>
                </execution>
            </executions>
        </plugin>
        <plugin>
            <groupId>org.apache.maven.plugins</groupId>
            <artifactId>maven-compiler-plugin</artifactId>
            <version>3.8.1</version>
            <configuration>
                <source>1.8</source>
                <target>1.8</target>
            </configuration>
        </plugin>
    </plugins>
</build>
```

Writing the Schema

```json
{
  "name": "Device",
  "namespace": "com.xx.xxx.device",
  "type": "record",
  "fields": [
    {
      "name": "id",
      "type": "long"
    },
    {
      "name": "Capability",
      "type": {
        "name": "Capability",
        "namespace": "com.xx.xxx.device.capability",
        "type": "record",
        "fields": [
          {
            "name": "name",
            "type": "string"
          },
          {
            "name": "value",
            "type": "string"
          }
        ]
      }
    }
  ]
}
```

Serialize

```java
import java.io.File;
import java.io.IOException;

import org.apache.avro.file.DataFileWriter;
import org.apache.avro.io.DatumWriter;
import org.apache.avro.specific.SpecificDatumWriter;

// Device and Capability are the classes generated by avro-maven-plugin from the schema above
private void serializeTestData() throws IOException {
    Device device = Device.newBuilder()
            .setId(1L)
            .setCapability(Capability.newBuilder()
                    .setName("light")
                    .setValue("on")
                    .build())
            .build();

    DatumWriter<Device> deviceDatumWriter = new SpecificDatumWriter<>(Device.class);
    // try-with-resources flushes and closes the file even if append() throws
    try (DataFileWriter<Device> dataFileWriter = new DataFileWriter<>(deviceDatumWriter)) {
        // create() writes the file header (schema + sync marker) to test.avro
        dataFileWriter.create(device.getSchema(), new File("test.avro"));
        dataFileWriter.append(device);
    }
}
```

Deserialize

```java
import java.io.File;
import java.io.IOException;

import org.apache.avro.file.DataFileReader;
import org.apache.avro.io.DatumReader;
import org.apache.avro.specific.SpecificDatumReader;

private void deserializeTestData() throws IOException {
    DatumReader<Device> deviceDatumReader = new SpecificDatumReader<>(Device.class);
    // The writer's schema is read from the file header
    try (DataFileReader<Device> dataFileReader =
                 new DataFileReader<>(new File("test.avro"), deviceDatumReader)) {
        Device device = null;
        while (dataFileReader.hasNext()) {
            // Passing the previous object lets Avro reuse it instead of allocating a new one
            device = dataFileReader.next(device);
            System.out.println(device);
        }
    }
}
```

Result

REFERENCE

- https://docs.confluent.io/platform/current/schema-registry/index.html#about-sr

- https://medium.com/@stephane.maarek/introduction-to-schemas-in-apache-kafka-with-the-confluent-schema-registry-3bf55e401321

- https://medium.com/@gaemi/kafka-%EC%99%80-confluent-schema-registry-%EB%A5%BC-%EC%82%AC%EC%9A%A9%ED%95%9C-%EC%8A%A4%ED%82%A4%EB%A7%88-%EA%B4%80%EB%A6%AC-1-cdf8c99d2c5c

- https://avro.apache.org/docs/1.11.1/specification/

- https://11st-tech.github.io/2022/06/28/schema-registry-in-live11/
