What Is Spring Cloud Sleuth

Spring Cloud Sleuth is the open-source distributed tracing solution of the Spring Cloud project.

Trace And Span

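| Name | Description |
|---|---|
| Trace | Groups an entire unit of work into one. Each trace has a single 64-bit ID. |
| Span | A sub-operation within the distributed system; work can be split into spans per service, per machine, or per thread. Each span has its own 64-bit ID. The first span's ID is the same as the traceId, and that span is called the root span. |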

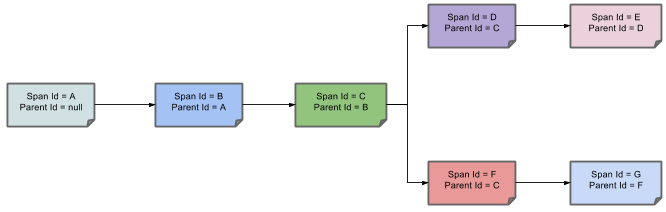

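This is an example of a single operation in a distributed system.

The entire operation is grouped into one Trace, and each sub-operation is represented as a Span.

This lets you trace the whole process at once while still inspecting each individual step.

Because every span except the root has a parent, the spans of a trace form a tree.

This structure makes it easy to follow the flow of a request span by span.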
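Because each span records its parent's ID, the tree can be rebuilt from a flat list of spans, which is essentially what a tracing UI does when it draws a trace. The sketch below shows this with JDK collections only; `SpanNode` and `SpanTree` are hypothetical names, and the IDs used with it are made up for illustration.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

/** Hypothetical, simplified span: only what is needed to rebuild the tree. */
final class SpanNode {
    final String spanId;
    final String parentId; // null for the root span
    final String name;
    final List<SpanNode> children = new ArrayList<>();

    SpanNode(String spanId, String parentId, String name) {
        this.spanId = spanId;
        this.parentId = parentId;
        this.name = name;
    }
}

final class SpanTree {
    /** Attaches every span to its parent and returns the root (the span without a parent). */
    static SpanNode build(List<SpanNode> spans) {
        Map<String, SpanNode> byId = new LinkedHashMap<>();
        for (SpanNode s : spans) {
            byId.put(s.spanId, s);
        }
        SpanNode root = null;
        for (SpanNode s : spans) {
            if (s.parentId == null) {
                root = s;
            } else {
                SpanNode parent = byId.get(s.parentId);
                if (parent != null) {
                    parent.children.add(s);
                }
            }
        }
        return root;
    }

    /** Renders the tree with two spaces of indentation per level. */
    static String render(SpanNode root) {
        StringBuilder sb = new StringBuilder();
        render(root, 0, sb);
        return sb.toString();
    }

    private static void render(SpanNode node, int depth, StringBuilder sb) {
        for (int i = 0; i < depth; i++) {
            sb.append("  ");
        }
        sb.append(node.name).append('\n');
        for (SpanNode child : node.children) {
            render(child, depth + 1, sb);
        }
    }
}
```

For example, a root span `service1`, a child `service2`, and two children of `service2` named `service3` and `service4` render as `service1`, then `service2` indented once, then the other two indented twice.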

Log Sample

```
service1.log:2016-02-26 11:15:47.561 INFO [service1,2485ec27856c56f4,2485ec27856c56f4,true] 68058 --- [nio-8081-exec-1] i.s.c.sleuth.docs.service1.Application : Hello from service1. Calling service2
service2.log:2016-02-26 11:15:47.710 INFO [service2,2485ec27856c56f4,9aa10ee6fbde75fa,true] 68059 --- [nio-8082-exec-1] i.s.c.sleuth.docs.service2.Application : Hello from service2. Calling service3 and then service4
service3.log:2016-02-26 11:15:47.895 INFO [service3,2485ec27856c56f4,1210be13194bfe5,true] 68060 --- [nio-8083-exec-1] i.s.c.sleuth.docs.service3.Application : Hello from service3
service2.log:2016-02-26 11:15:47.924 INFO [service2,2485ec27856c56f4,9aa10ee6fbde75fa,true] 68059 --- [nio-8082-exec-1] i.s.c.sleuth.docs.service2.Application : Got response from service3 [Hello from service3]
service4.log:2016-02-26 11:15:48.134 INFO [service4,2485ec27856c56f4,1b1845262ffba49d,true] 68061 --- [nio-8084-exec-1] i.s.c.sleuth.docs.service4.Application : Hello from service4
service2.log:2016-02-26 11:15:48.156 INFO [service2,2485ec27856c56f4,9aa10ee6fbde75fa,true] 68059 --- [nio-8082-exec-1] i.s.c.sleuth.docs.service2.Application : Got response from service4 [Hello from service4]
service1.log:2016-02-26 11:15:48.182 INFO [service1,2485ec27856c56f4,2485ec27856c56f4,true] 68058 --- [nio-8081-exec-1] i.s.c.sleuth.docs.service1.Application : Got response from service2 [Hello from service2, response from service3 [Hello from service3] and from service4 [Hello from service4]]
```

Trace Visualization

Zipkin

Shows the time spent in each service and the call relationships at a glance.

Errors can also be spotted at a glance.

Kibana with Logstash

Kibana visualizes the information collected through Logstash.
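For Kibana to correlate log lines, the trace fields first have to be pulled out of each line; in a Logstash pipeline this is typically done with a pattern-based filter such as grok. The Java sketch below shows the same extraction with a regular expression. `TraceFields` and `SleuthLogParser` are hypothetical names, and the pattern assumes the `[appName,traceId,spanId]` prefix seen in the logs of this post, with the optional fourth `true`/`false` field of the older sample format.

```java
import java.util.Optional;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

/** Trace fields printed in each log line: [appName,traceId,spanId(,exportable)]. */
final class TraceFields {
    final String app;
    final String traceId;
    final String spanId;

    TraceFields(String app, String traceId, String spanId) {
        this.app = app;
        this.traceId = traceId;
        this.spanId = spanId;
    }
}

final class SleuthLogParser {
    // appName may be empty and IDs are lowercase hex (both may be empty, as in "[,,]").
    // The optional ",true"/",false" suffix covers the older four-field format.
    private static final Pattern PREFIX =
            Pattern.compile("\\[([^,\\]]*),([0-9a-f]*),([0-9a-f]*)(?:,(?:true|false))?\\]");

    /** Returns the first trace prefix found in the line, if any. */
    static Optional<TraceFields> parse(String line) {
        Matcher m = PREFIX.matcher(line);
        if (!m.find()) {
            return Optional.empty();
        }
        return Optional.of(new TraceFields(m.group(1), m.group(2), m.group(3)));
    }
}
```

Grouping parsed lines by `traceId` reproduces, in miniature, the "show me every line of this request across all services" view that the Logstash and Kibana setup provides.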

How It Works

Since version 2.0.0, Spring Cloud Sleuth has been built on the Brave library.

As a result, tracing itself and the Zipkin integration are handled by Brave.

Sleuth uses Brave to put trace information into the MDC under specific keys.

In addition, each instrumentation library implements tracing objects that propagate the trace information managed by Brave.
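Putting trace information into the MDC works because both the current trace context and the logging MDC are per-thread state. The sketch below models that idea with plain JDK classes: opening a scope makes a context current and mirrors its IDs into an MDC-like map under the keys `traceId` and `spanId`, and closing the scope restores whatever was current before. `TraceIds`, `CurrentContext`, and the map standing in for SLF4J's `MDC` are hypothetical simplifications, not Brave's or Sleuth's API.

```java
import java.util.HashMap;
import java.util.Map;

/** Hypothetical minimal trace context: just the two IDs that end up in the logs. */
final class TraceIds {
    final String traceId;
    final String spanId;

    TraceIds(String traceId, String spanId) {
        this.traceId = traceId;
        this.spanId = spanId;
    }
}

/** Hypothetical thread-local "current context" that mirrors its IDs into an MDC-like map. */
final class CurrentContext {
    /** A scope whose close() restores the previously current context and never throws. */
    interface Scope extends AutoCloseable {
        @Override
        void close();
    }

    private static final ThreadLocal<TraceIds> CURRENT = new ThreadLocal<>();
    // Stand-in for SLF4J's MDC, which is also a per-thread map.
    static final ThreadLocal<Map<String, String>> MDC = ThreadLocal.withInitial(HashMap::new);

    static TraceIds get() {
        return CURRENT.get();
    }

    /** Makes ctx current on this thread until the returned scope is closed. */
    static Scope open(TraceIds ctx) {
        TraceIds previous = CURRENT.get();
        CURRENT.set(ctx);
        syncMdc(ctx);
        return () -> {
            CURRENT.set(previous);
            syncMdc(previous);
        };
    }

    private static void syncMdc(TraceIds ctx) {
        Map<String, String> mdc = MDC.get();
        if (ctx == null) {
            mdc.remove("traceId");
            mdc.remove("spanId");
        } else {
            mdc.put("traceId", ctx.traceId);
            mdc.put("spanId", ctx.spanId);
        }
    }
}
```

Using try-with-resources for scopes guarantees that a nested span's IDs are removed from the MDC as soon as that span's work is done, so later log lines show the outer span again.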

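Basic Usage (Brave)

```java
import brave.ScopedSpan;
import brave.Span;
import brave.Tracer;
import brave.Tracing;

// Create the Tracing instance and obtain its Tracer (Brave)
Tracing tracing = Tracing.newBuilder().localServiceName("xxx").build();
Tracer tracer = tracing.tracer();

// Create spans
Span span = tracer.newTrace().name("xxx").start(); // span held as an instance
ScopedSpan scoped = tracer.startScopedSpan("xxx"); // ThreadLocal-based span

// Create the next span (a child of the current span, or a new trace if none is current)
Span next = tracer.nextSpan();

// Propagation (to another system); Request::addHeader writes one header on the request
tracing.propagation()
    .injector(Request::addHeader)
    .inject(span.context(), request);

// Finish spans
scoped.finish();
span.finish();
```

Tracer (Sleuth)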

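The core interface Spring Cloud Sleuth uses for tracing.

It is registered as a bean and integrated with other components.

By default, Sleuth uses BraveTracer as the implementation, which is structured according to the bridge pattern.

(Internally, it wraps the Brave library's Tracer to implement Sleuth's features.)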

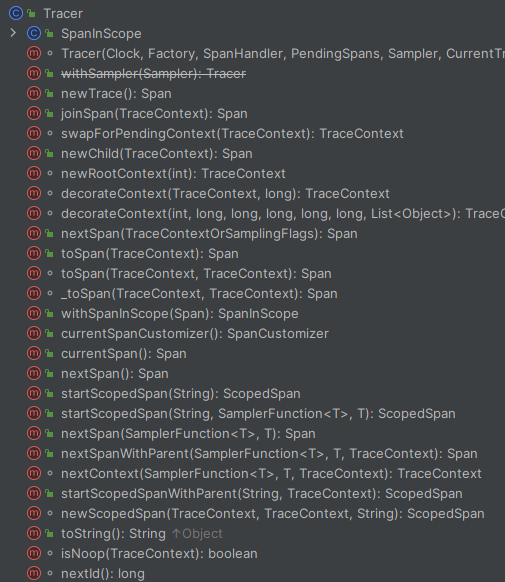
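The bridge structure described above can be reduced to a few types. In the stdlib-only sketch below, `AppTracer`/`AppSpan` play the role of Sleuth's `Tracer` interface, `LowLevelTracer` plays the role of Brave's tracer, and `BridgeTracer` implements the former by delegating to the latter. All names are hypothetical, and the methods are far simpler than the real interfaces.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ThreadLocalRandom;

/** API side (hypothetical): what application code depends on, like Sleuth's Tracer interface. */
interface AppTracer {
    AppSpan nextSpan(String name);
}

interface AppSpan {
    String spanId();

    void end();
}

/** Implementation side (hypothetical): a stand-in for the underlying Brave tracer. */
final class LowLevelTracer {
    final List<String> finished = new ArrayList<>();

    String newSpanId() {
        return String.format("%016x", ThreadLocalRandom.current().nextLong());
    }

    void report(String name, String spanId) {
        finished.add(name + ":" + spanId);
    }
}

/** Bridge: implements the API-side interface by delegating every call to the implementation side. */
final class BridgeTracer implements AppTracer {
    private final LowLevelTracer delegate;

    BridgeTracer(LowLevelTracer delegate) {
        this.delegate = delegate;
    }

    @Override
    public AppSpan nextSpan(String name) {
        String id = delegate.newSpanId();
        return new AppSpan() {
            @Override
            public String spanId() {
                return id;
            }

            @Override
            public void end() {
                delegate.report(name, id);
            }
        };
    }
}
```

Because application code depends only on `AppTracer`, the underlying tracer could be replaced without touching callers, which is the benefit of keeping Sleuth's API separate from Brave.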

Tracer (Brave)

The class in Brave that performs the actual tracing work.

It creates and manages the objects needed for tracing through methods such as newSpan, nextSpan, newTraceContext, and newChild.

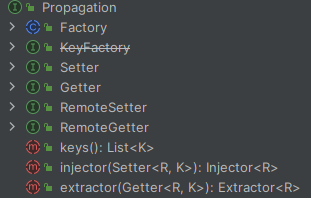
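The ID rules behind starting a new trace versus creating a child span can be sketched without Brave. In the hypothetical `TraceContextSketch` below, a new trace uses one random 64-bit value as both traceId and spanId (matching the root-span rule in the table at the top), while a child keeps the traceId, gets a fresh spanId, and records its creator's spanId as its parentId. This only illustrates the ID relationships; Brave's real `TraceContext` carries more state, such as sampling flags and optional 128-bit trace IDs.

```java
import java.util.concurrent.ThreadLocalRandom;

/** Hypothetical, simplified trace context: 64-bit IDs as 16-char lowercase hex strings. */
final class TraceContextSketch {
    final String traceId;
    final String spanId;
    final String parentId; // null for a root span

    private TraceContextSketch(String traceId, String spanId, String parentId) {
        this.traceId = traceId;
        this.spanId = spanId;
        this.parentId = parentId;
    }

    private static String randomId() {
        return String.format("%016x", ThreadLocalRandom.current().nextLong());
    }

    /** New trace: the root span reuses one random ID as both traceId and spanId, with no parent. */
    static TraceContextSketch newTrace() {
        String id = randomId();
        return new TraceContextSketch(id, id, null);
    }

    /** Child: same traceId, a fresh spanId, and this span's ID recorded as the parent. */
    TraceContextSketch newChild() {
        return new TraceContextSketch(traceId, randomId(), spanId);
    }
}
```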

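Propagation (Brave)

The interface Brave defines for trace propagation.

It is typically implemented together with an Injector, which writes a span's context into another object (for example, an outgoing request), and an Extractor, which reads that context back out of such an object.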
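The injector/extractor split can be sketched as two small functional interfaces plus B3 multi-header logic that works with any carrier type. `HeaderSetter`, `HeaderGetter`, `PropagatedContext`, and `B3MultiPropagation` are hypothetical names (Brave's real counterparts are `Propagation.Setter`/`Getter` and `TraceContext.Injector`/`Extractor`), and the header names follow the B3 multi-header format described in this post.

```java
/** Hypothetical setter/getter: how to write or read one header on a carrier of type R. */
interface HeaderSetter<R> {
    void put(R carrier, String key, String value);
}

interface HeaderGetter<R> {
    String get(R carrier, String key);
}

/** The fields B3 carries between processes. */
final class PropagatedContext {
    final String traceId;
    final String spanId;
    final String parentSpanId; // may be null
    final Boolean sampled;     // null means "defer"

    PropagatedContext(String traceId, String spanId, String parentSpanId, Boolean sampled) {
        this.traceId = traceId;
        this.spanId = spanId;
        this.parentSpanId = parentSpanId;
        this.sampled = sampled;
    }
}

/** Injector/extractor pair for B3 multi-header propagation, independent of the carrier type. */
final class B3MultiPropagation {
    static <R> void inject(PropagatedContext ctx, R carrier, HeaderSetter<R> setter) {
        setter.put(carrier, "X-B3-TraceId", ctx.traceId);
        setter.put(carrier, "X-B3-SpanId", ctx.spanId);
        if (ctx.parentSpanId != null) {
            setter.put(carrier, "X-B3-ParentSpanId", ctx.parentSpanId);
        }
        if (ctx.sampled != null) {
            setter.put(carrier, "X-B3-Sampled", ctx.sampled ? "1" : "0");
        }
    }

    /** Returns null when the carrier holds no usable context, i.e. a new trace should start. */
    static <R> PropagatedContext extract(R carrier, HeaderGetter<R> getter) {
        String traceId = getter.get(carrier, "X-B3-TraceId");
        String spanId = getter.get(carrier, "X-B3-SpanId");
        if (traceId == null || spanId == null) {
            return null;
        }
        String sampled = getter.get(carrier, "X-B3-Sampled");
        return new PropagatedContext(traceId, spanId,
                getter.get(carrier, "X-B3-ParentSpanId"),
                sampled == null ? null : "1".equals(sampled));
    }
}
```

With a `Map<String, String>` as the carrier, `(m, k, v) -> m.put(k, v)` serves as the setter and `(m, k) -> m.get(k)` as the getter; a real HTTP client would pass its own header methods instead. Note that HTTP header names are case-insensitive, which this map-based sketch ignores.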

Propagation implementations

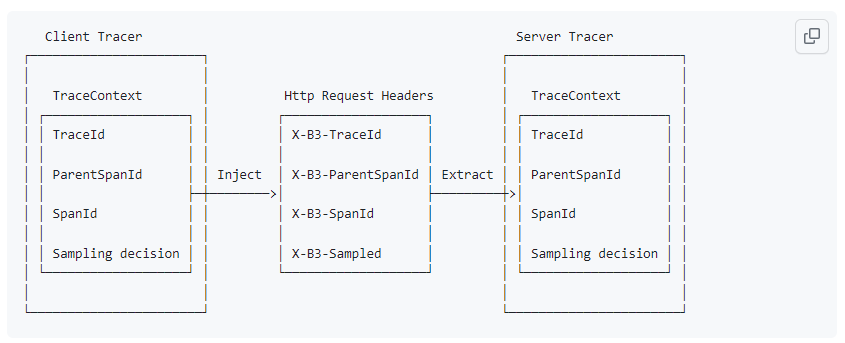

B3 Propagation

In a distributed system, tracing across systems requires a separate specification that every system agrees on.

B3 Propagation is one such specification and is compatible with many tracing systems.

Its header keys usually start with “X-B3-”.

| Name | Mandatory (O / X) | Description |
|---|---|---|
| traceId | O | 64- or 128-bit trace ID |
| spanId | O | 64-bit span ID |
| parentSpanId | X | Parent of the current span. Usually omitted when there is no parent (traceId == spanId). |
| SamplingState | X | Flag telling whether the received trace should be reported to the tracing system (e.g. Zipkin). Defer: the receiving system decides; Deny: not reported; Accept: reported if possible; Debug: reported in debug mode. |

Propagation Formats

Multiple Headers

```
// http header
x-b3-traceid:"88666bfe25af4d12",
x-b3-spanid:"67ba768f6754994f",
x-b3-parentspanid:"88666bfe25af4d12",
x-b3-sampled:"0"
```

Used when the carrier can hold several header values, such as HTTP headers.

Each applicable attribute from the table above is sent as its own header.

In the example above, a sampled value of "0" means Deny.

Single Header

```
// kafka header
b3: 3bce497d9c6833a4-e4efb90513184e29-0
```

The value is sent in the format b3={TraceId}-{SpanId}-{SamplingState}-{ParentSpanId}.
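The single-header value can be produced and parsed with a few lines of string handling. The hypothetical `B3Single` class below treats SamplingState and ParentSpanId as optional trailing fields, as in the Kafka example above (which carries no ParentSpanId). It is a sketch: it does not validate hex IDs and does not handle the sampling-only form of the header (for example `b3: 0`).

```java
/**
 * Hypothetical model of a B3 single-header value:
 * {TraceId}-{SpanId}[-{SamplingState}[-{ParentSpanId}]]
 */
final class B3Single {
    final String traceId;
    final String spanId;
    final String samplingState; // "1" accept, "0" deny, "d" debug; null (absent) = defer
    final String parentSpanId;  // null when absent

    B3Single(String traceId, String spanId, String samplingState, String parentSpanId) {
        this.traceId = traceId;
        this.spanId = spanId;
        this.samplingState = samplingState;
        this.parentSpanId = parentSpanId;
    }

    /** Builds the value; fields are positional, so ParentSpanId is only written after SamplingState. */
    String format() {
        StringBuilder sb = new StringBuilder(traceId).append('-').append(spanId);
        if (samplingState != null) {
            sb.append('-').append(samplingState);
            if (parentSpanId != null) {
                sb.append('-').append(parentSpanId);
            }
        }
        return sb.toString();
    }

    /** Splits on '-' and accepts two to four fields. */
    static B3Single parse(String value) {
        String[] parts = value.split("-");
        if (parts.length < 2 || parts.length > 4) {
            throw new IllegalArgumentException("malformed b3 value: " + value);
        }
        return new B3Single(parts[0], parts[1],
                parts.length > 2 ? parts[2] : null,
                parts.length > 3 ? parts[3] : null);
    }
}
```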

Why Propagate ParentSpanId

The tracing system uses it to build the span tree.

It determines where each reported span is placed in that tree.

Technical Validation

1. Verify tracing across thread switches

2. Verify tracing between REST servers (WebClient)

3. Verify Kafka publish and subscribe

4. Verify RSocket server / client

Environment

JDK 11

Spring Boot 2.7.0

Spring Cloud Sleuth 3.1.9

Spring WebFlux

Spring Data R2DBC

reactor-kafka 1.2.7.RELEASE

1. WebFlux (Reactor Netty) Tracing

Verify basic tracing behavior

Verify tracing across Reactor thread switches

Related Code

Adding the relevant libraries is enough to enable tracing.

Trace information is passed to Logback through the MDC.
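In the log of the scenario below, the same traceId appears on two different threads (`ctor-http-nio-4` and `actor-tcp-nio-2`). A plain ThreadLocal would not survive that hop, so the context has to be captured on one thread and restored on the next. The stdlib-only sketch below shows that capture-and-restore idea with an `Executor` wrapper; `TraceHolder` and `ContextPropagatingExecutor` are hypothetical, and Sleuth's Reactor instrumentation relies on Reactor hooks and the Reactor `Context` rather than a wrapper like this.

```java
import java.util.concurrent.Executor;

/** Hypothetical thread-local holder for the current traceId (stand-in for a full context). */
final class TraceHolder {
    static final ThreadLocal<String> TRACE_ID = new ThreadLocal<>();
}

/** Runs each task with the traceId that was current on the thread that submitted it. */
final class ContextPropagatingExecutor implements Executor {
    private final Executor delegate;

    ContextPropagatingExecutor(Executor delegate) {
        this.delegate = delegate;
    }

    @Override
    public void execute(Runnable task) {
        String captured = TraceHolder.TRACE_ID.get(); // read on the submitting thread
        delegate.execute(() -> {
            String previous = TraceHolder.TRACE_ID.get();
            TraceHolder.TRACE_ID.set(captured); // restored on the worker thread
            try {
                task.run();
            } finally {
                // Put the worker thread back the way it was so the ID does not leak into other tasks.
                if (previous == null) {
                    TraceHolder.TRACE_ID.remove();
                } else {
                    TraceHolder.TRACE_ID.set(previous);
                }
            }
        });
    }
}
```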

build.gradle

```groovy
...
implementation 'org.springframework.cloud:spring-cloud-starter-sleuth:3.1.9'
implementation 'org.springframework.boot:spring-boot-starter-webflux'
// Library for tracing inside Reactor Netty
implementation 'io.projectreactor.netty:reactor-netty-http-brave:1.1.9'
implementation 'org.springframework.boot:spring-boot-starter-data-r2dbc'
...
```

RestRouter

```java
...
@Bean
public RouterFunction<ServerResponse> traceRouterFunc() {
    return route(GET("/trace/service"), traceHandler::traceService)
            .andRoute(GET("/trace/r2dbc"), traceHandler::traceR2dbc)
            .andRoute(GET("/trace/webclient"), traceHandler::traceWebClient)
            .andRoute(GET("/trace/produce"), traceHandler::traceKafkaProduce)
            .andRoute(GET("/trace/rsocket"), traceHandler::traceRsocket);
}
...
```

TraceService

```java
...
public Mono<ServerResponse> traceR2dbc(final ServerRequest serverRequest) {
    log.info("traceService() start");
    return this.r2dbcService.r2dbcSelectCall()
            .flatMap(sampleData -> ServerResponse.ok().bodyValue(sampleData))
            .doOnNext(serverResponse -> log.info("traceService() end"));
}
...
```

R2dbcService

```java
...
public Mono<SampleData> r2dbcSelectCall() {
    return this.sampleRepo.findById(8)
            .doOnNext(sampleData -> log.info("r2dbcSelectCall()"));
}
...
```

Scenario

1. HTTP request

2. R2DBC MariaDB query

3. HTTP response

log

```
2023-09-04 16:57:45.216 INFO [sleuthTest,20dc9c663477eddc,20dc9c663477eddc] 24344 --- [ctor-http-nio-4] c.e.l.sample.webflux.TraceHandler : traceService() start
2023-09-04 16:57:45.440 INFO [sleuthTest,20dc9c663477eddc,20dc9c663477eddc] 24344 --- [actor-tcp-nio-2] c.e.l.sample.r2dbc.R2dbcService : r2dbcSelectCall()
2023-09-04 16:57:45.444 INFO [sleuthTest,20dc9c663477eddc,20dc9c663477eddc] 24344 --- [actor-tcp-nio-2] c.e.l.sample.webflux.TraceHandler : traceService() end
```

2. REST Server-to-Server Tracing

Verify tracing between HTTP servers

Verify that WebClient injects headers such as TraceId and SpanId

Verify that a new SpanId is created

Related Code

Caution!

- Header injection only works when the WebClient is managed as a bean.
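Sleuth applies its instrumentation to WebClient beans when the application context creates them (as we understand it, through a bean post-processor that registers a tracing exchange filter), which is why a WebClient built ad hoc outside the container is not instrumented. Reduced to its essence, that filter adds the B3 headers of a new child span to each outgoing request. The sketch below shows only that last step with the JDK's `java.net.http.HttpRequest.Builder`; `B3RequestDecorator` is a hypothetical name, and the IDs are passed in explicitly instead of coming from a tracer.

```java
import java.net.http.HttpRequest;
import java.util.function.UnaryOperator;

/**
 * Hypothetical decorator that adds B3 multi-headers to an outgoing request.
 * In Sleuth the IDs would come from the tracer; here they are passed in explicitly.
 */
final class B3RequestDecorator implements UnaryOperator<HttpRequest.Builder> {
    private final String traceId;
    private final String spanId;       // ID of the new client-side child span
    private final String parentSpanId; // span that was current when the request was made (non-null here)
    private final String sampled;      // "1" or "0"

    B3RequestDecorator(String traceId, String spanId, String parentSpanId, String sampled) {
        this.traceId = traceId;
        this.spanId = spanId;
        this.parentSpanId = parentSpanId;
        this.sampled = sampled;
    }

    @Override
    public HttpRequest.Builder apply(HttpRequest.Builder builder) {
        return builder
                .header("X-B3-TraceId", traceId)
                .header("X-B3-SpanId", spanId)
                .header("X-B3-ParentSpanId", parentSpanId)
                .header("X-B3-Sampled", sampled);
    }
}
```

Fed the IDs from the server 2 log of this scenario, the decorated request carries the same four `X-B3-*` headers that server 2 printed.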

WebClientConfig

```java
@Bean
public WebClient webClient() {
    return WebClient.builder()
            .build();
}
```

WebClientService

```java
public Mono<HashMap> traceWebClient() {
    return webClient
            .get()
            .uri("http://xxxx")
            .retrieve()
            .bodyToMono(HashMap.class)
            .doOnNext(hashMap -> log.info("qweasd"));
}
```

Server 2

```java
...
@GetMapping("/test")
public Mono<Map<String, String>> cccc(@RequestHeader Map<String, String> headers) {
    log.info("GET /test start");
    headers
            .forEach((key, value) -> log.info(key + " : " + value));
    return Mono.just(Map.of("test", "success"));
}
...
```

Scenario

1. User → Server 1 REST request

2. Server 1 → Server 2 REST request (WebClient)

3. Server 2 → Server 1 response

4. Server 1 → user response

log

```
// Server 1 (before request)
2023-09-04 17:20:04.372 INFO [sleuthTest,f988661d4e75a625,f988661d4e75a625] 17692 --- [ctor-http-nio-4] c.e.l.sample.webflux.TraceHandler : traceService() start

// Server 2
2023-09-04 17:20:04.494 INFO [,f988661d4e75a625,e72c2850246440e5] 18248 --- [ctor-http-nio-3] c.e.r.TestRestController : GET /test start
2023-09-04 17:20:04.495 INFO [,f988661d4e75a625,e72c2850246440e5] 18248 --- [ctor-http-nio-3] c.e.r.TestRestController : accept-encoding : gzip
2023-09-04 17:20:04.495 INFO [,f988661d4e75a625,e72c2850246440e5] 18248 --- [ctor-http-nio-3] c.e.r.TestRestController : user-agent : ReactorNetty/1.0.19
2023-09-04 17:20:04.495 INFO [,f988661d4e75a625,e72c2850246440e5] 18248 --- [ctor-http-nio-3] c.e.r.TestRestController : host : localhost:8080
2023-09-04 17:20:04.495 INFO [,f988661d4e75a625,e72c2850246440e5] 18248 --- [ctor-http-nio-3] c.e.r.TestRestController : accept : */*
2023-09-04 17:20:04.495 INFO [,f988661d4e75a625,e72c2850246440e5] 18248 --- [ctor-http-nio-3] c.e.r.TestRestController : X-B3-TraceId : f988661d4e75a625
2023-09-04 17:20:04.495 INFO [,f988661d4e75a625,e72c2850246440e5] 18248 --- [ctor-http-nio-3] c.e.r.TestRestController : X-B3-SpanId : e72c2850246440e5
2023-09-04 17:20:04.495 INFO [,f988661d4e75a625,e72c2850246440e5] 18248 --- [ctor-http-nio-3] c.e.r.TestRestController : X-B3-ParentSpanId : f988661d4e75a625
2023-09-04 17:20:04.495 INFO [,f988661d4e75a625,e72c2850246440e5] 18248 --- [ctor-http-nio-3] c.e.r.TestRestController : X-B3-Sampled : 0

// Server 1 (after request)
2023-09-04 17:20:04.552 INFO [sleuthTest,f988661d4e75a625,f988661d4e75a625] 17692 --- [ctor-http-nio-4] c.e.l.sample.webClient.WebClientService : qweasd
2023-09-04 17:20:04.554 INFO [sleuthTest,f988661d4e75a625,f988661d4e75a625] 17692 --- [ctor-http-nio-4] c.e.l.sample.webflux.TraceHandler : traceService() end
```

3. Kafka Pub / Sub Tracing

Verify header injection by the Kafka producer

Verify that the Kafka consumer continues the traceId from the message headers

Related Code

Since this is a reactive environment, the test targets Reactor Kafka.

Sleuth provides TracingKafkaProducerFactory, which extends ProducerFactory.

It also provides TracingKafkaReceiver, which implements KafkaReceiver.

build.gradle

```groovy
...
implementation 'io.projectreactor.kafka:reactor-kafka:1.2.7.RELEASE'
implementation 'org.springframework.kafka:spring-kafka'
...
```

KafkaConfig

```java
@Bean
public KafkaSender<String, String> kafkaSender(final BeanFactory beanFactory) {
    Map<String, Object> senderProps = new HashMap<>();
    senderProps.put(ProducerConfig.BOOTSTRAP_SERVERS_CONFIG, "xxxx");
    senderProps.put(ProducerConfig.KEY_SERIALIZER_CLASS_CONFIG, StringSerializer.class);
    senderProps.put(ProducerConfig.VALUE_SERIALIZER_CLASS_CONFIG, StringSerializer.class);
    SenderOptions<String, String> senderOptions = SenderOptions.create(senderProps);
    // Tracing-aware producer factory: the producer injects trace headers into each record
    return KafkaSender.create(new TracingKafkaProducerFactory(beanFactory), senderOptions);
}

@Bean
public KafkaReceiver<String, String> kafkaReceiver(ReactiveKafkaTracingPropagator reactiveKafkaTracingPropagator) {
    Map<String, Object> consumerConfig = new HashMap<>();
    consumerConfig.put(ConsumerConfig.BOOTSTRAP_SERVERS_CONFIG, "xxxx");
    consumerConfig.put(ConsumerConfig.GROUP_ID_CONFIG, "test-1");
    consumerConfig.put(ConsumerConfig.KEY_DESERIALIZER_CLASS_CONFIG, StringDeserializer.class);
    consumerConfig.put(ConsumerConfig.VALUE_DESERIALIZER_CLASS_CONFIG, StringDeserializer.class);
    consumerConfig.put(ConsumerConfig.AUTO_OFFSET_RESET_CONFIG, OffsetResetStrategy.LATEST.name().toLowerCase());
    consumerConfig.put(ConsumerConfig.MAX_POLL_RECORDS_CONFIG, 10);
    ReceiverOptions<String, String> receiverOptions = ReceiverOptions.create(consumerConfig);
    // Tracing-aware receiver: continues the trace from each received record's headers
    return TracingKafkaReceiver
            .create(reactiveKafkaTracingPropagator, receiverOptions
                    .atmostOnceCommitAheadSize(20)
                    .subscription(Pattern.compile("xxxx")));
}
```

Producer

```java
public Flux<Integer> sendMessage(final int count) {
    return this.kafkaSender
            .send(Flux
                    .range(1, count)
                    .map(i -> SenderRecord.create("chchoi.test.1", null, new Date().getTime(), null, i + "-" + UUID.randomUUID(), i)))
            .doOnError(e -> log.error("send error", e))
            .doOnNext(response -> log.info("response: cm: {}, rm: {}", response.correlationMetadata(), response.recordMetadata()))
            .map(SenderResult::correlationMetadata);
}
```

Consumer

```java
@Override
public void onApplicationEvent(final ApplicationReadyEvent event) {
    this.kafkaReceiver
            .receiveAutoAck()
            .concatMap(r -> r)
            .doOnNext(stringStringConsumerRecord -> {
                log.info("next....");
            })
            .onErrorContinue((e, o) -> log.error("error on consume", e))
            .subscribe(record -> log.info("topic: {}, key: {}, value: {}", record.topic(), record.key(), record.value()));
}
```

Scenario

1. REST request

2. Produce a topic message

3. Consume the topic message

log

```
// REST request received
2023-09-04 17:33:53.615 INFO [sleuthTest,3bce497d9c6833a4,3bce497d9c6833a4] 7340 --- [ctor-http-nio-4] c.e.l.sample.webflux.TraceHandler : traceService() start

// init Producer
2023-09-04 17:33:53.626 INFO [sleuthTest,3bce497d9c6833a4,3bce497d9c6833a4] 7340 --- [ctor-http-nio-4] o.a.k.clients.producer.ProducerConfig : ProducerConfig values:
acks = -1
batch.size = 16384
...
2023-09-04 17:33:53.632 INFO [sleuthTest,3bce497d9c6833a4,3bce497d9c6833a4] 7340 --- [ctor-http-nio-4] o.a.k.clients.producer.KafkaProducer : [Producer clientId=producer-1] Instantiated an idempotent producer.
2023-09-04 17:33:53.643 INFO [sleuthTest,3bce497d9c6833a4,3bce497d9c6833a4] 7340 --- [ctor-http-nio-4] o.a.kafka.common.utils.AppInfoParser : Kafka version: 3.1.1

// produce start
2023-09-04 17:33:53.644 INFO [sleuthTest,3bce497d9c6833a4,3bce497d9c6833a4] 7340 --- [ctor-http-nio-4] o.a.kafka.common.utils.AppInfoParser : Kafka commitId: 97671528ba54a138
2023-09-04 17:33:53.644 INFO [sleuthTest,3bce497d9c6833a4,3bce497d9c6833a4] 7340 --- [ctor-http-nio-4] o.a.kafka.common.utils.AppInfoParser : Kafka startTimeMs: 1693816433643
...
2023-09-04 17:33:53.687 INFO [sleuthTest,3bce497d9c6833a4,3bce497d9c6833a4] 7340 --- [ single-1] c.e.l.sample.kafka.KafkaService : response: cm: 1, rm: chchoi.test.1-0@53
2023-09-04 17:33:53.687 INFO [sleuthTest,3bce497d9c6833a4,3bce497d9c6833a4] 7340 --- [ single-1] c.e.l.sample.kafka.KafkaService : response: cm: 2, rm: chchoi.test.1-0@54
2023-09-04 17:33:53.687 INFO [sleuthTest,3bce497d9c6833a4,3bce497d9c6833a4] 7340 --- [ single-1] c.e.l.sample.kafka.KafkaService : response: cm: 3, rm: chchoi.test.1-0@55
// produce end

// consume start
2023-09-04 17:33:53.746 INFO [sleuthTest,3bce497d9c6833a4,2f0175bf3ca03167] 7340 --- [ parallel-3] c.e.l.sample.kafka.ReadyListener : next....
2023-09-04 17:33:53.746 INFO [sleuthTest,3bce497d9c6833a4,2f0175bf3ca03167] 7340 --- [ parallel-3] c.e.l.sample.kafka.ReadyListener : topic: chchoi.test.1, key: null, value: 1-9ccf744f-052e-497b-bf92-556d8a501768
2023-09-04 17:33:53.746 INFO [sleuthTest,3bce497d9c6833a4,7435a497d71b90ab] 7340 --- [ parallel-3] c.e.l.sample.kafka.ReadyListener : next....
2023-09-04 17:33:53.746 INFO [sleuthTest,3bce497d9c6833a4,7435a497d71b90ab] 7340 --- [ parallel-3] c.e.l.sample.kafka.ReadyListener : topic: chchoi.test.1, key: null, value: 2-6c5ecc32-12d9-416b-8df3-79a6a892e411
2023-09-04 17:33:53.747 INFO [sleuthTest,3bce497d9c6833a4,ca941de8f65d2d36] 7340 --- [ parallel-3] c.e.l.sample.kafka.ReadyListener : next....
2023-09-04 17:33:53.747 INFO [sleuthTest,3bce497d9c6833a4,ca941de8f65d2d36] 7340 --- [ parallel-3] c.e.l.sample.kafka.ReadyListener : topic: chchoi.test.1, key: null, value: 3-72b7d2fc-5baf-4897-a65c-4748465706da
// consume end

// rest end
2023-09-04 17:33:53.747 INFO [sleuthTest,3bce497d9c6833a4,3bce497d9c6833a4] 7340 --- [ single-1] c.e.l.sample.webflux.TraceHandler : traceService() end
```
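In the consume phase of the log above, all three records share traceId 3bce497d9c6833a4 with the REST request, yet each record is logged with its own spanId. That pattern is consistent with the consumer extracting the producer's context from each record's `b3` header and starting a new child span per record. The stdlib-only sketch below models that assumption; `RecordSketch`, `ConsumerSpan`, and `TracingConsumerSketch` are hypothetical and are not the API of `TracingKafkaReceiver`.

```java
import java.util.Map;
import java.util.concurrent.ThreadLocalRandom;

/** Hypothetical consumed record: only the parts that matter here. */
final class RecordSketch {
    final Map<String, String> headers;
    final String value;

    RecordSketch(Map<String, String> headers, String value) {
        this.headers = headers;
        this.value = value;
    }
}

/** The span a consumer would open for one record. */
final class ConsumerSpan {
    final String traceId;
    final String spanId;
    final String parentSpanId; // null if the record carried no context

    ConsumerSpan(String traceId, String spanId, String parentSpanId) {
        this.traceId = traceId;
        this.spanId = spanId;
        this.parentSpanId = parentSpanId;
    }
}

final class TracingConsumerSketch {
    private static String randomId() {
        return String.format("%016x", ThreadLocalRandom.current().nextLong());
    }

    /** Continues the producer's trace from the "b3" header, or starts a new trace if it is absent. */
    static ConsumerSpan spanFor(RecordSketch record) {
        String spanId = randomId();
        String b3 = record.headers.get("b3");
        if (b3 == null) {
            return new ConsumerSpan(spanId, spanId, null); // no upstream context: new root span
        }
        String[] parts = b3.split("-"); // {TraceId}-{SpanId}[-{SamplingState}[-{ParentSpanId}]]
        return new ConsumerSpan(parts[0], spanId, parts[1]);
    }
}
```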

4. RSocket Server / Client Tracing

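Verify tracing when the RSocket server receives a request

Verify traceId injection when the RSocket client sends a request

Related Code

On the RSocket server, tracing works without any extra configuration once the dependency is added and enabled.

The client must be configured with TracingRSocketConnectorConfigurer, an implementation of RSocketConnectorConfigurer.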

build.gradle

```groovy
...
implementation 'org.springframework.boot:spring-boot-starter-rsocket'
...
```

RSocketServer

```java
@MessageMapping("/test")
@NewSpan("testSSS")
public Mono<A> traceService() {
    return Mono.just(new A("testResponseData"));
}
```

RSocketClientConfig

```java
@Bean
public RSocketRequester rSocketRequester(Propagator propagator, Tracer tracer) {
    return RSocketRequester.builder()
            .rsocketStrategies(RSocketStrategies.builder()
                    .decoder(new Jackson2JsonDecoder())
                    .build())
            // Injects trace context into the metadata of outgoing requests
            .rsocketConnector(new TracingRSocketConnectorConfigurer(propagator, tracer, true))
            .dataMimeType(MimeType.valueOf("application/json"))
            .transport(TcpClientTransport.create("localhost", 8000));
}
```

RSocketClient

```java
public Mono<A> traceRSocket() {
    log.info("traceRSocket() start");
    return this.rSocketRequester
            .route("/test")
            .data("qweasd")
            .retrieveMono(A.class)
            .doOnNext(a -> log.info("receiveFromRSocket: {}", a.getA()));
}
```

Scenario

1. REST request

2. Server 1 → Server 2 RSocket request

3. RSocket response

4. REST response

log

```
// Server 1
2023-09-04 17:45:46.148 INFO [sleuthTest,95ab4dfd5ea0968b,95ab4dfd5ea0968b] 7340 --- [ctor-http-nio-4] c.e.l.sample.webflux.TraceHandler : traceService() start
2023-09-04 17:45:46.154 INFO [sleuthTest,95ab4dfd5ea0968b,95ab4dfd5ea0968b] 7340 --- [ctor-http-nio-4] c.e.l.sample.rsocket.RSocketService : traceRSocket() start
2023-09-04 17:45:46.253 DEBUG [sleuthTest,95ab4dfd5ea0968b,95ab4dfd5ea0968b] 7340 --- [actor-tcp-nio-3] io.rsocket.FrameLogger : sending ->
Frame => Stream ID: 0 Type: SETUP Flags: 0b0 Length: 75
Data:

2023-09-04 17:45:46.280 DEBUG [sleuthTest,95ab4dfd5ea0968b,95ab4dfd5ea0968b] 7340 --- [actor-tcp-nio-3] io.rsocket.FrameLogger : sending ->
Frame => Stream ID: 1 Type: REQUEST_RESPONSE Flags: 0b100000000 Length: 95
Metadata:
+-------------------------------------------------+
| 0 1 2 3 4 5 6 7 8 9 a b c d e f |
+--------+-------------------------------------------------+----------------+
|00000000| fe 00 00 06 05 2f 74 65 73 74 fd 00 00 19 94 95 |...../test......|
|00000010| ab 4d fd 5e a0 96 8b 1f 0e e7 1a 2e 31 9c ce 95 |.M.^........1...|
|00000020| ab 4d fd 5e a0 96 8b 01 62 33 00 00 23 39 35 61 |.M.^....b3..#95a|
|00000030| 62 34 64 66 64 35 65 61 30 39 36 38 62 2d 31 66 |b4dfd5ea0968b-1f|
|00000040| 30 65 65 37 31 61 32 65 33 31 39 63 63 65 2d 30 |0ee71a2e319cce-0|
+--------+-------------------------------------------------+----------------+
Data:
+-------------------------------------------------+
| 0 1 2 3 4 5 6 7 8 9 a b c d e f |
+--------+-------------------------------------------------+----------------+
|00000000| 71 77 65 61 73 64 |qweasd |
+--------+-------------------------------------------------+----------------+

2023-09-04 17:45:46.396 DEBUG [sleuthTest,95ab4dfd5ea0968b,95ab4dfd5ea0968b] 7340 --- [actor-tcp-nio-3] io.rsocket.FrameLogger : receiving ->
Frame => Stream ID: 1 Type: NEXT_COMPLETE Flags: 0b1100000 Length: 30
Data:
+-------------------------------------------------+
| 0 1 2 3 4 5 6 7 8 9 a b c d e f |
+--------+-------------------------------------------------+----------------+
|00000000| 7b 22 61 22 3a 22 74 65 73 74 52 65 73 70 6f 6e |{"a":"testRespon|
|00000010| 73 65 44 61 74 61 22 7d |seData"} |
+--------+-------------------------------------------------+----------------+

2023-09-04 17:45:46.404 INFO [sleuthTest,95ab4dfd5ea0968b,95ab4dfd5ea0968b] 7340 --- [actor-tcp-nio-3] c.e.l.sample.rsocket.RSocketService : receiveFromRSocket: testResponseData
2023-09-04 17:45:46.404 INFO [sleuthTest,95ab4dfd5ea0968b,95ab4dfd5ea0968b] 7340 --- [actor-tcp-nio-3] c.e.l.sample.webflux.TraceHandler : traceService() end
```

```
// Server 2
2023-09-04 17:45:46.288 DEBUG [,,] 18248 --- [ctor-http-nio-4] io.rsocket.FrameLogger : receiving ->
Frame => Stream ID: 0 Type: SETUP Flags: 0b0 Length: 75
Data:

2023-09-04 17:45:46.315 DEBUG [,,] 18248 --- [ctor-http-nio-4] io.rsocket.FrameLogger : receiving ->
Frame => Stream ID: 1 Type: REQUEST_RESPONSE Flags: 0b100000000 Length: 95
Metadata:
+-------------------------------------------------+
| 0 1 2 3 4 5 6 7 8 9 a b c d e f |
+--------+-------------------------------------------------+----------------+
|00000000| fe 00 00 06 05 2f 74 65 73 74 fd 00 00 19 94 95 |...../test......|
|00000010| ab 4d fd 5e a0 96 8b 1f 0e e7 1a 2e 31 9c ce 95 |.M.^........1...|
|00000020| ab 4d fd 5e a0 96 8b 01 62 33 00 00 23 39 35 61 |.M.^....b3..#95a|
|00000030| 62 34 64 66 64 35 65 61 30 39 36 38 62 2d 31 66 |b4dfd5ea0968b-1f|
|00000040| 30 65 65 37 31 61 32 65 33 31 39 63 63 65 2d 30 |0ee71a2e319cce-0|
+--------+-------------------------------------------------+----------------+
Data:
+-------------------------------------------------+
| 0 1 2 3 4 5 6 7 8 9 a b c d e f |
+--------+-------------------------------------------------+----------------+
|00000000| 71 77 65 61 73 64 |qweasd |
+--------+-------------------------------------------------+----------------+

2023-09-04 17:45:46.387 DEBUG [,95ab4dfd5ea0968b,acab5cb6dc4c8625] 18248 --- [ctor-http-nio-4] io.rsocket.FrameLogger : sending ->
Frame => Stream ID: 1 Type: NEXT_COMPLETE Flags: 0b1100000 Length: 30
Data:
+-------------------------------------------------+
| 0 1 2 3 4 5 6 7 8 9 a b c d e f |
+--------+-------------------------------------------------+----------------+
|00000000| 7b 22 61 22 3a 22 74 65 73 74 52 65 73 70 6f 6e |{"a":"testRespon|
|00000010| 73 65 44 61 74 61 22 7d |seData"} |
+--------+-------------------------------------------------+----------------+
```
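The REQUEST_RESPONSE frame's metadata in the dumps above is RSocket composite metadata, and it can be decoded by hand to see what TracingRSocketConnectorConfigurer actually sent. Reading the bytes, the frame carries three entries: a routing entry (`/test`), a `message/x.rsocket.tracing-zipkin.v0` entry holding the IDs in binary form after a one-byte flags field, and a `b3` entry holding the single-header string. The sketch below is a minimal decoder written against the composite metadata layout and the well-known MIME IDs 0x7D and 0x7E from the RSocket WellKnownMimeTypes document; `CompositeMetadataSketch` is a hypothetical helper, not part of rsocket-java.

```java
import java.nio.charset.StandardCharsets;
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.Map;

/**
 * Hypothetical minimal decoder for RSocket composite metadata. Each entry is:
 * 1 byte MIME id (high bit set: well-known id in the low 7 bits; otherwise the byte is
 * (MIME string length - 1) followed by the MIME string), a 24-bit big-endian length, then the payload.
 */
final class CompositeMetadataSketch {
    // Only the two well-known IDs that appear in the dump above.
    private static final Map<Integer, String> WELL_KNOWN = Map.of(
            0x7D, "message/x.rsocket.tracing-zipkin.v0",
            0x7E, "message/x.rsocket.routing.v0");

    static Map<String, byte[]> decode(byte[] buf) {
        Map<String, byte[]> entries = new LinkedHashMap<>();
        int i = 0;
        while (i < buf.length) {
            int idByte = buf[i++] & 0xFF;
            String mime;
            if ((idByte & 0x80) != 0) {
                int id = idByte & 0x7F;
                mime = WELL_KNOWN.getOrDefault(id, "well-known:" + id);
            } else {
                int mimeLength = idByte + 1;
                mime = new String(buf, i, mimeLength, StandardCharsets.US_ASCII);
                i += mimeLength;
            }
            int length = ((buf[i] & 0xFF) << 16) | ((buf[i + 1] & 0xFF) << 8) | (buf[i + 2] & 0xFF);
            i += 3;
            entries.put(mime, Arrays.copyOfRange(buf, i, i + length));
            i += length;
        }
        return entries;
    }

    /** Parses a whitespace-separated hex dump such as "fe 00 00 06 ...". */
    static byte[] fromHex(String hex) {
        String clean = hex.replaceAll("\\s+", "");
        byte[] out = new byte[clean.length() / 2];
        for (int k = 0; k < out.length; k++) {
            out[k] = (byte) Integer.parseInt(clean.substring(2 * k, 2 * k + 2), 16);
        }
        return out;
    }

    /** Lowercase hex of bytes [from, to). */
    static String hex(byte[] bytes, int from, int to) {
        StringBuilder sb = new StringBuilder();
        for (int k = from; k < to; k++) {
            sb.append(String.format("%02x", bytes[k] & 0xFF));
        }
        return sb.toString();
    }
}
```

Decoding the 80 metadata bytes of the dump yields the b3 value `95ab4dfd5ea0968b-1f0ee71a2e319cce-0`, whose traceId matches server 2's log line `[,95ab4dfd5ea0968b,acab5cb6dc4c8625]`.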

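Conclusion

Tracing integration can be set up with little effort.

Some of the configuration is a bit tricky, but the specifications and the responsibilities of each component are clear, so we see no obstacle to adopting it.

Error handling and trace visualization still need further investigation.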

REFERENCE

docs

https://docs.spring.io/spring-cloud-sleuth/docs/2.2.9.BUILD-SNAPSHOT/reference/html/#introduction

https://docs.spring.io/spring-cloud-sleuth/docs/current-SNAPSHOT/reference/html/integrations.html

https://docs.spring.io/spring-cloud-sleuth/docs/2.2.9.BUILD-SNAPSHOT/reference/html/appendix.html

netty example

https://github.com/btkelly/Sleuth-Netty-Example/blob/master/build.gradle

producer example

https://github.com/spring-cloud-samples/spring-cloud-sleuth-samples/tree/main/kafka-reactive-producer

consumer example

https://github.com/spring-cloud-samples/spring-cloud-sleuth-samples/tree/main/kafka-reactive-consumer

rsocket

https://github.com/rsocket/rsocket/blob/master/Extensions/WellKnownMimeTypes.md

org.springframework.cloud.sleuth.instrument.rsocket.TracingRSocketConnectorConfigurer

https://micrometer.io/docs/tracing
